Patterns of Miscommunication and Misrepresentation

By Joep van Genuchten

18 minute read, 3809 words

In the ontological process, understanding each other is key. Yet people, colleagues included, misunderstand each other so commonly and so unavoidably that we accept it as part of life. In semantic discussions, however, I have noticed a couple of recurring patterns of miscommunication that, once we are aware of them, we may be able to avoid or quickly resolve.

Some of these patterns are relatively simple to recognize, overcome and correct for. Others are much harder to identify and often have far-reaching consequences for how we think we see the world and for the ways we collect data about it.

This section is called Patterns of Miscommunication and Misrepresentation because all of these patterns also affect our own way of thinking: our own mental model of reality. Think of this as a miscommunication with ourselves. Data modellers especially need to be aware of this in themselves.

Important to note: this is by no means an exhaustive list. It is just a simplified overview of patterns that I have noticed.

Synonyms across domain specific languages

The most common cause of misunderstanding actually arises before we have even started to communicate. We all love cursing our data silos; however, organizational silos are just as damaging to data sharing. Especially now that many organizations are, rightfully, moving towards more decentralized data architectures, the ubiquitous language (a concept from domain-driven design) is gaining popularity. A ubiquitous language is explicitly aimed at local understanding: its scope is typically suggested to be one team. This means you need to be very sure, as an organization, that two (or more) teams are not doing (almost) identical things. And how will you be able to determine this if the problems the teams are addressing are described with different words, in different languages?

Note

I am not arguing against the use and development of ubiquitous languages. They have an important role in digitization, especially in its early phases. They also make modelling an explicit part of software development (again). This is a breath of fresh air while we are still cleaning up the mess of bad behavior that schema-less technologies enabled.

So encourage teams to do this, but beware of the limits: with a ubiquitous language you are not done. In the best-case scenario, ubiquitous languages leave a number of business challenges around data unaddressed; in the worst case, they create new ones.

Ultimately, ubiquitous languages are probably the best thing we can do at scale at this moment. Knowledge of how to create an organization-wide model that emerges from these ubiquitous languages and facilitates communication between people is scarce and still in development. A lot of what is written here is in pursuit of that goal.

This issue is amplified when people work from home, as they do during a lockdown. The informal encounters that we typically have in the office, and that often allow us to uncover the overlap or similarities between our work and that of our colleagues, no longer happen.

We are all special snowflakes, with our own unique views of reality (and I am the most special one, otherwise I wouldn’t have had the guile to write this paper). But we do in fact share concepts with our colleagues, sometimes with people who are not even in our team or department. When we do, it is important to recognize this so that we can communicate about it. This will eventually allow us to streamline the processes in the organization, make sure we don’t do the same thing twice, and simplify our organization and our data architecture.
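Here is a minimal sketch of what recognizing shared concepts could look like in practice. It assumes each team has mapped its own terms to identifiers in a shared, organization-wide concept list. The team names, the terms and the `concept:` identifiers below are hypothetical. The hard, human part is agreeing on that mapping; the code only makes the overlap visible once the mapping exists.

```python
from collections import defaultdict

# Hypothetical team vocabularies: each team's local term -> shared concept id.
team_vocabularies = {
    "billing": {"client": "concept:Customer", "invoice": "concept:Invoice"},
    "support": {"customer": "concept:Customer", "ticket": "concept:Ticket"},
    "logistics": {"consignee": "concept:Customer", "parcel": "concept:Shipment"},
}


def shared_concepts(vocabularies):
    """Return the concepts used by more than one team, with each team's local term."""
    usage = defaultdict(dict)  # concept id -> {team: local term}
    for team, terms in vocabularies.items():
        for term, concept in terms.items():
            usage[concept][team] = term
    return {concept: teams for concept, teams in usage.items() if len(teams) > 1}


for concept, terms in shared_concepts(team_vocabularies).items():
    print(concept, terms)
# concept:Customer {'billing': 'client', 'support': 'customer', 'logistics': 'consignee'}
```

In this example, three teams turn out to be talking about the same concept under three different names.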

But…

Just because we share concepts with colleagues does not mean that we all mean the same thing by the same word. The rest of this section is about the times when we think we are talking about the same thing but in fact are not.

What something is versus what something does or means to us

A common source of misunderstanding is the difference between what something is and what something does. For example:

- A road is a collection of bricks lying side by side

- To us, a road means a more energy-efficient way to get from A to B.

When most people navigate from home to work, they don’t typically care about the bricks or the tarmac. They care about how the roads are connected, how they are being used (traffic jams?) and how fast they are allowed to drive on them.

Most people look at roads that way. But some people, very important people in the context of roads, care about roads in a different way: the people who design and maintain them. When in this role (after all, these people don’t just build roads, they also use them like everyone else), they do see the bricks and the tarmac, and the concrete pillars that support the bridges. To them, what the road physically is matters more. Their information requirement is aimed at the design and maintenance of roads. To them, a brick road is something very different from a tarmac road: you don’t fix a pothole in a tarmac road with bricks.

I live on a street called Veerpolderstraat. This is my road; I use it all the time. When the bricks get replaced by tarmac, the road, in the sense of what it is, has changed. It is no longer a collection of bricks; it is now something else: a strip of tarmac. But it is also still the same Veerpolderstraat. It connects in the same way to the same other roads. Meanwhile, the bricks may be reused to fix another road elsewhere. The bricks have persisted, but they now have a different use to us. They no longer form the Veerpolderstraat; they form another street, allowing people to get from A to B there.

Eventually, these two perspectives come together. This typically happens when the road is damaged to such a degree that it affects how we can use it. Is the road broken, or are the bricks broken? Both. But the moment the bricks have been fixed, we may not be able to use the road yet: the people who fixed it likely still need to clean up after themselves, remove diversion signs, etcetera. So when we can use the road again is not purely a function of when the bricks are restored.
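One way to keep the two perspectives apart in data is to model the functional road and the physical pavement as separate things. The two are linked by a relation that is only valid for a period of time. The sketch below assumes this approach. The class names, the pavement ids `P1`/`P2`, the dates and "Hypothetical Street" are all made up for illustration.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class Road:
    """Functional perspective: what the road does for us."""
    name: str
    connects_to: set = field(default_factory=set)
    open_for_traffic: bool = True  # usability, tracked separately from the pavement


@dataclass
class Pavement:
    """Physical perspective: what the road is made of."""
    pavement_id: str
    material: str


pavements = {"P1": Pavement("P1", "brick"), "P2": Pavement("P2", "tarmac")}

# Time-bound relation between the perspectives:
# (road name, pavement id, valid from, valid until; None = still valid)
formed_by = [
    ("Veerpolderstraat", "P1", date(2000, 1, 1), date(2021, 6, 1)),
    ("Veerpolderstraat", "P2", date(2021, 6, 1), None),
    ("Hypothetical Street", "P1", date(2021, 7, 1), None),  # bricks reused
]


def _valid_on(start: date, end: Optional[date], on: date) -> bool:
    return start <= on and (end is None or on < end)


def pavement_of(road_name: str, on: date) -> Optional[Pavement]:
    """Which physical pavement forms this road on a given day?"""
    for road, pav_id, start, end in formed_by:
        if road == road_name and _valid_on(start, end, on):
            return pavements[pav_id]
    return None


def road_formed_by(pav_id: str, on: date) -> Optional[str]:
    """Which road does this pavement form on a given day?"""
    for road, pid, start, end in formed_by:
        if pid == pav_id and _valid_on(start, end, on):
            return road
    return None


street = Road("Veerpolderstraat", connects_to={"Hypothetical Lane"})
print(pavement_of(street.name, date(2020, 1, 1)).material)  # brick
print(pavement_of(street.name, date(2022, 1, 1)).material)  # tarmac
print(road_formed_by("P1", date(2022, 1, 1)))  # Hypothetical Street
```

The `Road` object keeps its identity while its pavement changes, and the bricks (`P1`) go on to form another street. Whether the road is open for traffic is kept in a separate field rather than derived from the pavement, because usability is not purely a function of the bricks.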

It is partly because of this specific miscommunication that trends like predictive maintenance have such trouble really taking off. The concept of predictive maintenance is both complex and complicated in and of itself, but the constant miscommunication between subject matter experts makes figuring it out, as a sector, that much harder.

Generalized versus specialized concepts

This pattern often leads to our inability to recognize our own information requirement in someone else’s data. What happens is the following. Say I’m looking for data about motorcycles. I look around, and all I find is data about vehicles. That is close, but no cigar. So I make my own dataset from scratch.

Now I have one team that manages a data set about vehicles, and one that manages a data set about motorcycles. Each team assigns its own IDs to its objects. If we do some real detective work, we will eventually find out that both teams have data about the same motorcycle. After all, a motorcycle is one specific type of vehicle. Once we compare licence plate data in both sets, we might find that we do in fact have multiple records that are about (see also the section on aboutness) the same things.
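Here is a minimal sketch of that detective work. It assumes both data sets record a licence plate, and that a plate identifies one vehicle at a given time. That second point is an assumption, since plates can be transferred or reused. All records and identifiers below are hypothetical.

```python
# Hypothetical records; each team uses its own identifiers.
vehicles = [
    {"vehicle_id": "V-001", "plate": "AB-12-CD", "type": "car"},
    {"vehicle_id": "V-002", "plate": "12-XY-34", "type": "motorcycle"},
    {"vehicle_id": "V-003", "plate": "ZZ-99-ZZ", "type": "truck"},
]
motorcycles = [
    {"moto_id": "M-17", "plate": "12-xy-34", "engine_cc": 650},
    {"moto_id": "M-18", "plate": "98-QR-76", "engine_cc": 125},
]


def normalize_plate(plate):
    """Compare plates case-insensitively and without separators."""
    return plate.replace("-", "").replace(" ", "").upper()


def same_things(vehicles, motorcycles):
    """Pairs of (motorcycle id, vehicle id) whose records are about the same object."""
    by_plate = {normalize_plate(v["plate"]): v["vehicle_id"] for v in vehicles}
    return [
        (m["moto_id"], by_plate[normalize_plate(m["plate"])])
        for m in motorcycles
        if normalize_plate(m["plate"]) in by_plate
    ]


print(same_things(vehicles, motorcycles))  # [('M-17', 'V-002')]
```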

Part-whole relationships

Part-whole relationships can be very useful to create a mental map of large volumes of information about a complex reality. They are a very important tool for knowledge management in organizations. However, they can also be very confusing. In this section we discuss a couple of ways in which people get lost in part-whole relationships.

Part-whole confused for generalization-specialization

A specific way in which miscommunication emerges around generalization/specialization relationships is that many people (in my experience, especially those with an engineering background) mistake part/whole relationships for generalization/specialization relationships. I suspect this is related to the transitivity of properties. For instance: if a car travels at 100 km/h, its engine also travels at 100 km/h. In a world where data modellers are taught to normalize information to avoid redundancy, this sharing of properties between a whole and its parts might look a lot like generalization/specialization. The concept that represents the set of things that are cars is thus confused with the concept that represents the parts that are in the car.

I have found that this translates back into the mental model people have of the world, and therefore into the concepts (the mental projections) they form around it. This can result in some particularly pesky misunderstandings, but once identified, it can easily be overcome. People who typically fall into this trap are actually pretty good at analytical thinking (otherwise they wouldn’t have embarked on modelling in the first place). So once the flaw in their thinking has been pointed out, they are quite capable of adjusting their mental models accordingly.
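In object-oriented terms, the confusion often shows up as inheritance used where composition is meant. The minimal Python sketch below uses hypothetical class names. `Car` *has* an `Engine`, which is part-whole. `SportsCar` *is* a `Car`, which is generalization-specialization. The shared speed comes from the engine being part of the car, not from the engine being a kind of car.

```python
from dataclasses import dataclass


@dataclass
class Engine:
    power_kw: float


@dataclass
class Car:
    engine: Engine          # part-whole: a car HAS an engine
    speed_kmh: float = 0.0


@dataclass
class SportsCar(Car):       # generalization-specialization: a sports car IS a car
    top_speed_kmh: float = 250.0


# The mistake described above would be `class Engine(Car): ...`:
# an engine is part of a car, not a kind of car.


def engine_speed(car: Car) -> float:
    """The engine shares the car's speed because it is part of the car."""
    return car.speed_kmh


car = SportsCar(engine=Engine(power_kw=300.0), speed_kmh=100.0)
print(isinstance(car, Car))         # True
print(isinstance(car.engine, Car))  # False
print(engine_speed(car))            # 100.0
```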

Forgetting that part-whole is perspective dependent

In engineering, we are drilled to think in terms of system breakdown structures. In organizations, we tend to think of departments and teams. As humans, we like thinking up tree structures to facilitate our understanding of the world. These trees often describe part-whole relationships (a car has wheels, a department has teams, teams have people). However, how we form these trees depends on our perspective.

Let’s go back to the road example from earlier. Physically speaking, a road is made up of bricks. Functionally, that road might have bike lanes demarcated by painted lines, and those painted lines might run right over a brick. The mistake we often make is to think that we can make a single part-whole tree of this road. Typically, we come up with something like this:

- Road
  - Has lane types
    - Car Lanes
      - has Lanes
        - Car lane north bound
          - Has bricks
            - brick 1
            - brick 2
        - Car lane south bound
          - Has bricks
            - brick 3
            - brick 4
    - Bike Lanes
      - has Lanes
        - Bike lane north bound
          - Has bricks
            - brick 5
            - brick 6
        - Bike lane south bound
          - Has bricks
            - brick 7
            - brick 8

What typically happens next is the following. The person who has just paved the road, and who needs to register how the road was made, runs into a problem: brick 9 has a line over it. It is part of both ‘Bike lane south bound’ and ‘Car lane south bound’. To which lane does it belong? This person decides to assign it to the lane that contains the largest portion of the brick. Their colleague, further down the road, decides to assign a brick in a similar situation to the lane that is ‘most in the middle’. After all, sometimes it is really close and hard to tell. A third colleague just assigns it to both. Now we have data inconsistency.
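A small sketch can reproduce this inconsistency. It uses a one-dimensional simplification: lanes and bricks are intervals across the width of the road, in metres. The lane boundaries and the position of brick 9 are hypothetical. So is the reading of ‘most in the middle’, taken here as the lane whose centre is closest to the centre of the road.

```python
# Hypothetical cross-section of the road: positions in metres from one kerb.
LANES = {
    "Bike lane south bound": (0.0, 1.5),
    "Car lane south bound": (1.5, 4.5),
    "Car lane north bound": (4.5, 7.5),
    "Bike lane north bound": (7.5, 9.0),
}
ROAD_CENTRE = 4.5


def overlap(a, b):
    """Length of the overlap between two (start, end) intervals."""
    return max(0.0, min(a[1], b[1]) - max(a[0], b[0]))


def touching(brick):
    """Lanes the brick lies in, with how much of the brick is in each."""
    shares = {lane: overlap(brick, span) for lane, span in LANES.items()}
    return {lane: share for lane, share in shares.items() if share > 0}


def largest_portion(brick):  # colleague 1
    shares = touching(brick)
    return {max(shares, key=shares.get)}


def most_in_the_middle(brick):  # colleague 2: lane whose centre is nearest the road centre
    def distance_to_centre(lane):
        start, end = LANES[lane]
        return abs((start + end) / 2 - ROAD_CENTRE)
    return {min(touching(brick), key=distance_to_centre)}


def assign_to_all(brick):  # colleague 3
    return set(touching(brick))


brick_9 = (1.3, 1.6)  # the painted line at 1.5 m runs over this brick
for rule in (largest_portion, most_in_the_middle, assign_to_all):
    print(rule.__name__, sorted(rule(brick_9)))
```

The three rules give `['Bike lane south bound']`, `['Car lane south bound']` and `['Bike lane south bound', 'Car lane south bound']` respectively: three reasonable choices, three different registrations of the same brick.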

Note

A typical first reflex to this issue is to create a ‘business rule’ that standardizes the way we deal with this situation: always assign the brick to the most middle lane. Spoiler alert: this is not the solution to this problem.

Hold on: a data quality issue over what, exactly? Why does it matter that we know which brick is in which lane? It seems to matter because that is how we defined our system breakdown structure. However, if we accept that there might be two perspectives on the road (see the section ‘What something is versus what something does or means to us’), we can resolve this issue. The Road-Brick breakdown structure represents a physical breakdown of the road. The Road-Lane breakdown structure represents a functional breakdown of the road. They do not need to be the same, nor do we need to unify them in a single tree. In fact, trying to force them into the same tree creates all sorts of administrative… BS… By recognizing that there are multiple perspectives and making them explicit, we simplify the data registration process and avoid ambiguity, both of which typically benefit data quality.

You might ask: but what if I want to know how many bricks are in the bike lane? My response would be to ask: why do you want to know this? What purpose would that insight serve? What conclusion would you want to draw from it? If a single brick gets damaged to such an extent that it needs to be replaced, you probably still have to close multiple lanes to give the workers room to do their work safely. If you want to calculate the surface area of the lanes: are bricks really the best way of doing that? These are values that, at first glance, may seem relevant. But look at your actual information requirement, the thing you actually want to know. These values often turn out to be mere proxies for it, and there are better ways of deriving what you need.
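Here is a sketch of the alternative, under the same kind of one-dimensional simplification (positions across the road in metres, all values hypothetical). The physical and the functional breakdown are registered separately. Which bricks lie under a lane, or how large a lane is, is then derived from geometry when someone actually needs it, instead of being fixed at registration time.

```python
# Physical breakdown: road -> bricks, with hypothetical positions across the road (metres).
physical = {
    "road": "Veerpolderstraat",
    "bricks": {"brick 8": (0.0, 0.3), "brick 9": (1.3, 1.6), "brick 3": (2.0, 2.3)},
}

# Functional breakdown: road -> lanes, registered independently of the bricks.
functional = {
    "road": "Veerpolderstraat",
    "lanes": {"Bike lane south bound": (0.0, 1.5), "Car lane south bound": (1.5, 4.5)},
}
ROAD_LENGTH_M = 120.0  # hypothetical


def bricks_under(lane_name):
    """Derived on demand from geometry; no brick is ever 'assigned' to a lane."""
    start, end = functional["lanes"][lane_name]
    return sorted(
        brick
        for brick, (b_start, b_end) in physical["bricks"].items()
        if b_start < end and start < b_end  # the intervals overlap
    )


def lane_area_m2(lane_name):
    """Surface area from the lane's own geometry, not from counting bricks."""
    start, end = functional["lanes"][lane_name]
    return (end - start) * ROAD_LENGTH_M


print(bricks_under("Bike lane south bound"))  # ['brick 8', 'brick 9']
print(bricks_under("Car lane south bound"))   # ['brick 3', 'brick 9']
print(lane_area_m2("Car lane south bound"))   # 360.0
```

Brick 9 shows up under both lanes. That is not a data quality problem here, because nobody had to decide on it when the road was registered.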

The lesson to take away from this is that breakdown structures can be very helpful to organize a lot of information about a complex system in our limited brains, but they are perspective dependent.

Temporal confusion: event vs activity vs state

In the grand scheme of things, there are more things that we might want to talk about than we have words for in our natural languages. One typical example in my work has been the (natural language) term ‘malfunction’. When trying to determine the information requirement around ‘malfunction’, we soon realize that there is an event to it (the moment something starts malfunctioning). There is also a state to it (the period during which the thing is malfunctioning, until it is fixed).
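Below is a minimal sketch of the difference, using hypothetical `MalfunctionEvent` and `MalfunctionState` types and a made-up asset, "pump 1". Counting events and summing state durations answer different questions about the same malfunctions. A malfunction that started in February still contributes downtime to March, even though no malfunction event happened in March for it.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class MalfunctionEvent:
    """The moment something starts malfunctioning."""
    asset: str
    at: datetime


@dataclass
class MalfunctionState:
    """The period during which something is malfunctioning, until it is fixed."""
    asset: str
    start: datetime
    end: Optional[datetime] = None  # None: not fixed yet

    def duration_within(self, window_start: datetime, window_end: datetime) -> timedelta:
        end = self.end if self.end is not None else window_end
        return max(min(end, window_end) - max(self.start, window_start), timedelta(0))


states = [
    MalfunctionState("pump 1", datetime(2021, 2, 27), datetime(2021, 3, 2)),
    MalfunctionState("pump 1", datetime(2021, 3, 20), datetime(2021, 3, 21)),
]
events = [MalfunctionEvent(s.asset, s.start) for s in states]

march = (datetime(2021, 3, 1), datetime(2021, 4, 1))
events_in_march = sum(march[0] <= e.at < march[1] for e in events)
downtime_in_march = sum((s.duration_within(*march) for s in states), timedelta(0))
print(events_in_march)    # 1
print(downtime_in_march)  # 2 days, 0:00:00
```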

Aboutness

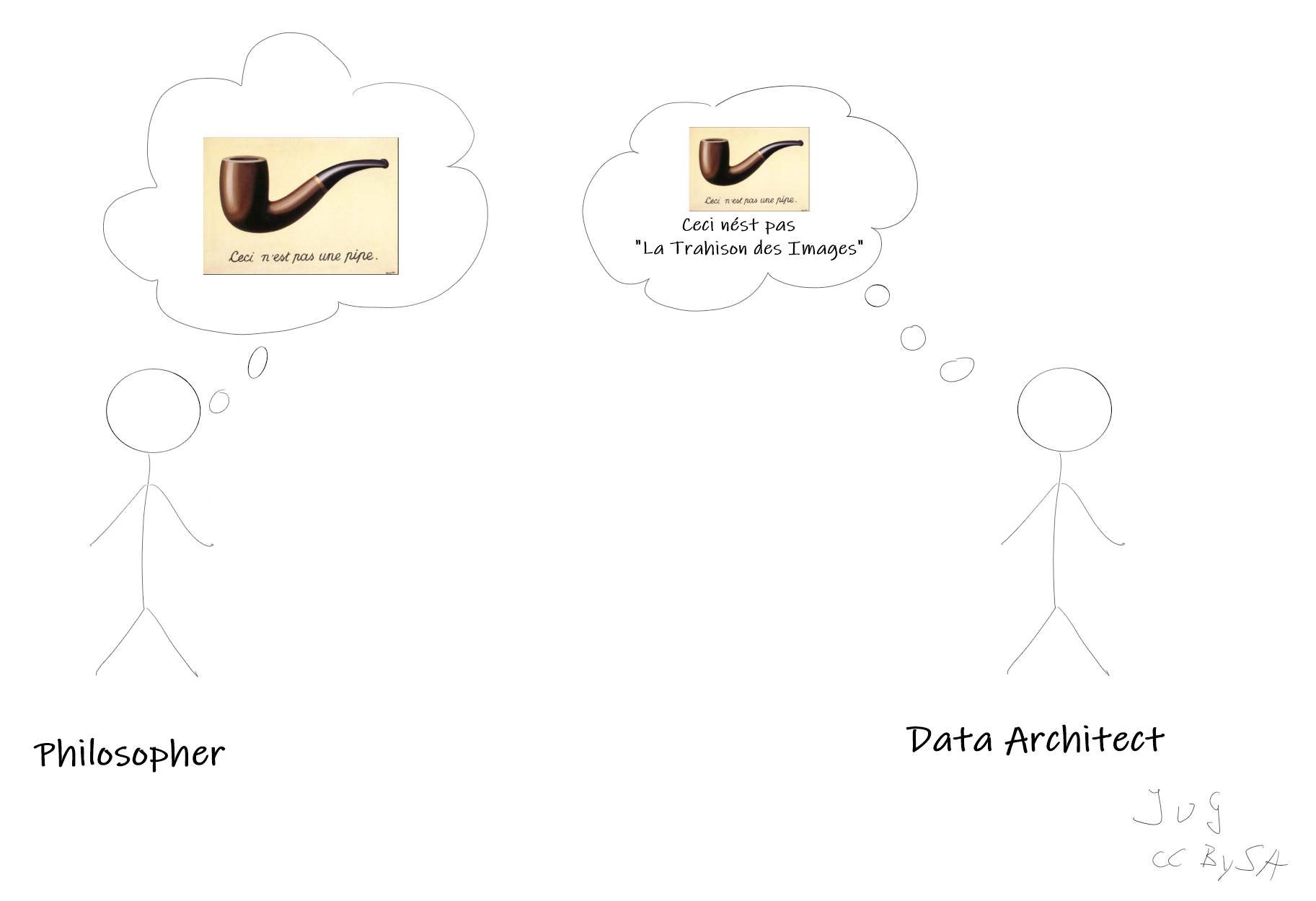

This is a source of confusion that we as people can often overcome with intuition, but computers get very confused by it. Aboutness is a subject that studies the nature of the relationship between a representation (say a picture) and that which it represents.

A famous art piece depicting this is Magritte’s ‘La Trahison des Images’. All though in the context of data architecture, it gets a couple of extra layers:

The challenge with this pattern of miscommunication becomes evident when we start dealing with metadata (data about data), and specifically when dealing with audits (metadata at the record level). Consider the following table with data about people.

| id | first name | last name | age |
|---|---|---|---|
| dfvsrg3r | Jane | Doe | 56 |
| sdgwy45y | Jon | Doe | 62 |
| we1345 | Jane | Cash | 53 |

Now let’s say I make a copy of the first record:

| id | first name | last name | age |
|---|---|---|---|
| dfvsrg3r | Jane | Doe | 56 |

To illustrate how computers get confused by this, let’s ask about the lineage of the copied record. If we look at PROV-O, it says that a prov:Entity can be prov:wasDerivedFrom another prov:Entity. The only identifier we have here is the ID of the person. This would result in an expression like dfvsrg3r prov:wasDerivedFrom dfvsrg3r, which doesn’t help. Some would argue that the record isn’t the same, so the copy should get a new key. Let’s say we do that:

| id | first name | last name | age |
|---|---|---|---|
| 5jd93kdf | Jane | Do | 56 |

Indeed, now we can say: 5jd93kdf prov:wasDerivedFrom dfvsrg3r. Problem solved, right? Not really. We made an error in the copy of the record: the last name changed from Doe to Do. Now, how will we know if we are still talking about the same person? The ID has changed and the last name has changed. Furthermore, suppose we have another table with addresses. It would typically have a foreign key referring to the ID in the original table, so the link between the copied record and the address would be lost as well.

The problem here is that the record of a thing is separate from the thing itself. In this case, the record of Jane Doe is not the same as Jane Doe. A record can be copied; a person cannot.

One easy solution (in tabular data) is to add a record id to every record:

| record id | id | first name | last name | age |
|---|---|---|---|---|
| fj295hs8 | dfvsrg3r | Jane | Doe | 56 |

When we make a copy of the record, we give it a new record id. We can now express data provenance, and the table with addresses can still point to the id column which now uniquely and universally refers to a person also known by the name “Jane Doe” and persists after copying.
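Below is a minimal Python sketch of the record-id approach, using the identifiers from the tables above. The `copy_record` helper, the in-memory provenance list and the address lookup are all hypothetical. The provenance list stands in for PROV-O `prov:wasDerivedFrom` statements; a real system would store these in its own tables or in a triple store.

```python
import uuid
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PersonRecord:
    record_id: str  # identifies this record: the representation
    person_id: str  # identifies the person the record is about
    first_name: str
    last_name: str
    age: int


# Stand-in for PROV-O statements: (derived record, "prov:wasDerivedFrom", source record)
provenance = []


def copy_record(record: PersonRecord, **changes) -> PersonRecord:
    """Copy a record: new record id, same person id, provenance recorded."""
    new = replace(record, record_id=uuid.uuid4().hex[:8], **changes)
    provenance.append((new.record_id, "prov:wasDerivedFrom", record.record_id))
    return new


original = PersonRecord("fj295hs8", "dfvsrg3r", "Jane", "Doe", 56)
copied = copy_record(original, last_name="Do")  # the copy error from the example
addresses = {"dfvsrg3r": "(hypothetical address)"}  # keyed on the person, not the record

print(copied.person_id == original.person_id)  # True
print(provenance[0][2])                         # fj295hs8
print(addresses[copied.person_id])              # (hypothetical address)
```

Even with the typo in the copy, the copied record still points at the same person, its lineage is recorded, and the address remains reachable.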

Data registration process and sampling bias

When looking at data sets, we tend to assume we are looking at all the data that the data set suggests it contains. However, as the number of entities we are tracking grows, the completeness aspect of data quality becomes practically impossible to achieve: you simply lose stuff. Organizations specify the process by which data is collected. These processes often recognize that they produce incomplete results: we aim for 99% (or 99.9%, or six sigma, etc.) completeness. Yet looking at the way the process is designed can give us clues about which cases typically get overlooked. Below are some examples and how we eventually figured them out.

Survivorship bias: data of only the things you have access to

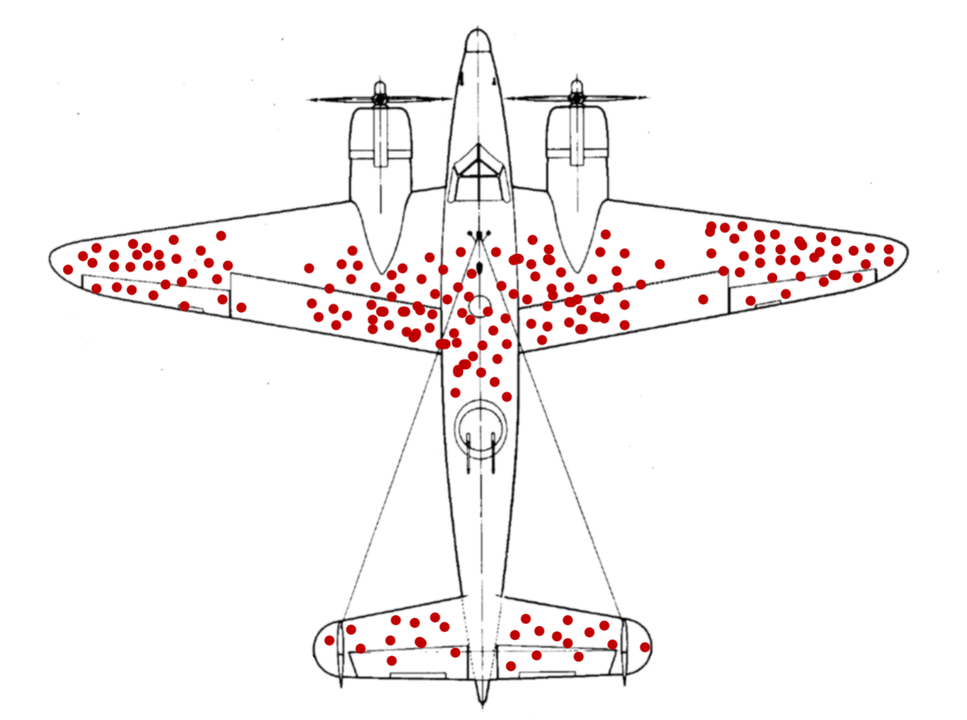

A famous example of this pattern is called survivorship bias. A nutshell example is that when the allies during WWII were trying to determine how to fortify their aircraft, they made a map of bullet holes they had found in the aircraft. They got a dataset that looked something like this (artist impression, not the real data):

The first reflex was to fortify the airplanes in those places where most of the bullets landed, until someone pointed out that they were not looking at all the planes. The data only represented those planes that had managed to return. So looking at where these planes didn’t get hit might be more insightful. And indeed, looking at the data, you see that areas like the engines and the cockpit got suspiciously few hits if you assume a uniform distribution of bullet holes.
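A toy simulation makes the mechanism visible. Purely for illustration, it assumes five aircraft regions, uniformly distributed hits, and that any hit on the engine or the cockpit means the plane does not return. None of this is the historical data.

```python
import random
from collections import Counter

REGIONS = ["wings", "fuselage", "tail", "engine", "cockpit"]
CRITICAL = {"engine", "cockpit"}  # assumption: a hit here means the plane is lost


def fly_missions(n_planes, hits_per_plane, rng):
    """Return hit counts for all planes and for the planes that made it back."""
    all_hits, observed_hits = Counter(), Counter()
    for _ in range(n_planes):
        hits = [rng.choice(REGIONS) for _ in range(hits_per_plane)]  # uniform hits
        all_hits.update(hits)
        if not CRITICAL.intersection(hits):  # only returning planes can be inspected
            observed_hits.update(hits)
    return all_hits, observed_hits


all_hits, observed_hits = fly_missions(1000, 3, random.Random(42))
for region in REGIONS:
    print(f"{region:9} all planes: {all_hits[region]:4}  returned planes: {observed_hits[region]:4}")
```

In the returned planes, the engine and cockpit counts are always zero. That is not because those areas are never hit, but because the planes hit there are missing from the data.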

Blue shift or red mirage: data that is temporarily incomplete

The 2020 US presidential election gave us another nice example of how seeing only part of the complete set can throw us for a loop. On election night (November 3rd), a naive observer would have been convinced that Trump had won the election by a landslide. After a couple of days (November 7th), the race was called in Biden’s favour instead. Eventually, Biden would win by more than 7 million votes and 306 electoral votes: by no means a narrow victory.

In this case, counting ‘all’ the votes was done in different processes, in different orders. This meant that how representative the counted sample was of the complete set changed over time. In a world where people have grown accustomed to near-real-time data, we can sometimes draw conclusions too early.

This example also shows that you can anticipate these patterns. Indeed, most of the media had already pointed out before election night that this would happen, and as a consequence they were very careful not to call the election too early.
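A sketch with invented numbers shows how the running result can flip purely because of the order in which subsets are counted. The candidates `A` and `B` and the batch sizes are hypothetical, not 2020 results.

```python
# Hypothetical reporting batches, in the order they are counted (invented numbers).
batches = [
    ("in person, first count", {"A": 600, "B": 400}),
    ("in person, second count", {"A": 550, "B": 450}),
    ("mail-in, first count", {"A": 300, "B": 700}),
    ("mail-in, late arrivals", {"A": 250, "B": 750}),
]


def running_results(batches):
    """Yield the cumulative totals and the current leader after each batch."""
    totals = {"A": 0, "B": 0}
    for label, counts in batches:
        for candidate, votes in counts.items():
            totals[candidate] += votes
        yield label, dict(totals), max(totals, key=totals.get)


for label, totals, leader in running_results(batches):
    print(f"{label:24} {totals}  leader: {leader}")
```

After the first two batches, `A` leads; once the mail-in batches are counted, `B` leads by 600. The final totals do not depend on the order, but every intermediate snapshot does.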

P-Hacking

P-hacking is a process in which, in a nutshell, data gets removed from the analysis data set by classifying it as an anomaly. Removing anomalous data from a data set is often part of data quality processes: we expect data to look a certain way, and when it doesn’t, we think of it as wrong.

However, we don’t always know why data looks the way it does. And frequently, this results in us wrongly classifying data as anomalous. When this happens, we aren’t improving our data: we make it match our expectations of it. Our bias creeps in and data no longer reflects reality, but rather our perception of it.
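A small sketch shows how this bias creeps in. The measurements and the ‘expected’ value are hypothetical. Dropping everything that deviates too far from what we expect pulls the result towards our expectation, even though the dropped values were real.

```python
from statistics import mean

# Hypothetical measurements; we *expect* values around 10.
measurements = [9.8, 10.1, 10.4, 11.9, 12.2, 12.5, 13.1, 13.4, 14.0, 14.6]
EXPECTED = 10.0
TOLERANCE = 2.0  # "anything further from what we expect must be an error"


def remove_anomalies(values, expected, tolerance):
    """Drop values that deviate from our expectation: the biased 'cleaning' step."""
    return [v for v in values if abs(v - expected) <= tolerance]


kept = remove_anomalies(measurements, EXPECTED, TOLERANCE)
print(f"all data: mean {mean(measurements):.2f} (n={len(measurements)})")
print(f"'cleaned': mean {mean(kept):.2f} (n={len(kept)})")
```

The data as collected centres around 12.2. After ‘cleaning’, only four values remain and the mean is 10.55, close to what we expected all along.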

A trick to finding exceptions

There is a recurring pattern to these examples. The concept of ‘airplane’ seems pretty well defined: we can point at them. So the set of things that are airplanes is pretty easy to conceive of. Similarly, the set of all cast votes should leave little ambiguity.

In both cases, we can define attributes, or properties, beyond the classification (airplane, cast vote) that determine which records we have access to. In the case of the airplanes: ‘those airplanes that came back’. In the case of the cast votes (depending on the state): ‘cast votes that were sent in by mail’ or ‘cast votes that were cast in person’. Our natural languages, and even our formal ones, do not typically contain terms to express these nuances. For understandability’s sake, they probably shouldn’t. But each of these subsets of the total adds a slight, sometimes crucial, nuance to what we are talking about, or what we are looking at.

The trick is thus to think of what the complete set would be, knowing that you do not have access to it and then to look for those attributes that the data collection/curation process is likely to have a bias for or against.
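One way to make this trick concrete is to compare the observed share of each attribute value with the share you would expect in the complete set. The function below is a hypothetical sketch. The expected shares are an assumption you have to justify yourself; here they are a uniform distribution of hits over four regions. The records are made up.

```python
from collections import Counter


def representation_ratios(records, attribute, expected_shares):
    """Observed share divided by expected share, per value of `attribute`.

    Ratios well below 1 point at values the collection process may be missing."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {value: (counts[value] / total) / share for value, share in expected_shares.items()}


# Hypothetical bullet-hole records from the planes that returned.
records = (
    [{"region": "wings"}] * 40
    + [{"region": "fuselage"}] * 35
    + [{"region": "tail"}] * 20
    + [{"region": "engine"}] * 5
)
# Assumed shares for the complete set (here: uniform over four regions).
expected = {"wings": 0.25, "fuselage": 0.25, "tail": 0.25, "engine": 0.25}

for region, ratio in representation_ratios(records, "region", expected).items():
    print(f"{region:9} {ratio:.2f}")
```

A ratio of 0.20 for the engine does not prove anything by itself. It is a prompt to ask which attribute of the collection process (here: ‘came back’) might explain the gap.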

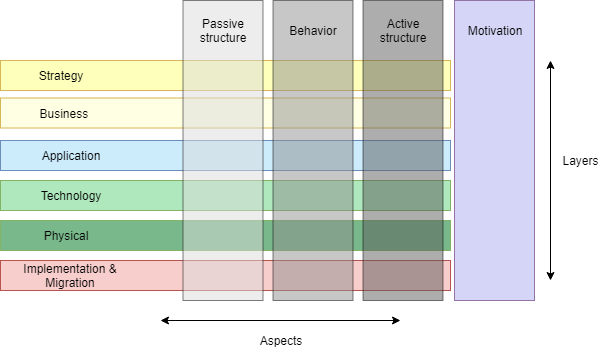

Homonyms across Architectural layers

I find that this pattern of miscommunication ironically occurs most frequently in communication between architects and I have personally fallen for it many times.

In architectural roles (business, enterprise, solution, data, any really), we tend to make use of architectural layers to simplify our problem and to help us separate concerns. Many architectural frameworks do this: ArchiMate, the OSI (Open Systems Interconnection) model, the Smart Grid Architecture Model, and many more. Looking at them more closely, we see a lot of overlap. Typically, these layers include a technology layer, an information layer and a business layer. Depending on the framework, they also define one or more layers in between those three.

A single concept can have a representation across multiple layers within these architectural frameworks and the terms we use to refer to them are not explicit about which layer they occur at. While these miscommunications can cost a lot of time and energy, they are also relatively easy to identify and resolve.
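Here is a minimal sketch of one mitigation: a glossary in which every term is qualified by the layer it lives at. The layers come from the text above. The terms and their definitions are hypothetical examples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualifiedTerm:
    layer: str  # e.g. "business", "information", "technology"
    term: str


# Hypothetical glossary: the same word used at different architectural layers.
glossary = {
    QualifiedTerm("business", "customer"): "A party we have an agreement with.",
    QualifiedTerm("information", "customer"): "A record describing such a party.",
    QualifiedTerm("technology", "customer"): "The database table that stores those records.",
    QualifiedTerm("technology", "server"): "A machine that runs our applications.",
}


def homonyms(glossary):
    """Terms that appear at more than one layer: candidates for miscommunication."""
    layers_by_term = {}
    for qualified in glossary:
        layers_by_term.setdefault(qualified.term, set()).add(qualified.layer)
    return {term: sorted(layers) for term, layers in layers_by_term.items() if len(layers) > 1}


print(homonyms(glossary))  # {'customer': ['business', 'information', 'technology']}
```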

Conclusion

Miscommunication and misrepresentation cause problems in both the ontological and the epistemological processes of any organization. This affects ‘business as usual’ as well as highly innovative processes. Understanding these patterns and checking for them, whether in communication with your colleagues or in the process of data modelling, will help create a more compact, more shared understanding of what our world looks like. This will make us, as well as our digital technology, more interoperable (better able to work together).

List of common patterns of miscommunication that we have talked about (in no particular order).

- Synonyms across domain specific languages.

- What something is versus what something does or means to us.

- Generalized versus specialized concepts.

- Part-whole relationships.

- Temporal confusion: event vs activity vs state.

- Aboutness.

- Data registration process and sampling bias.

- Homonyms across Architectural layers.